The AWS Certified Machine Learning Specialty validates expertise in building, training, tuning, and deploying machine learning (ML) models on AWS.

Use this App to learn about Machine Learning on AWS and prepare for the AWS Machine Learning Specialty Certification MLS-C01.

Download AWS Machine Learning Specialty Exam Prep App on iOS

Download AWS Machine Learning Specialty Exam Prep App on Android/Web/Amazon

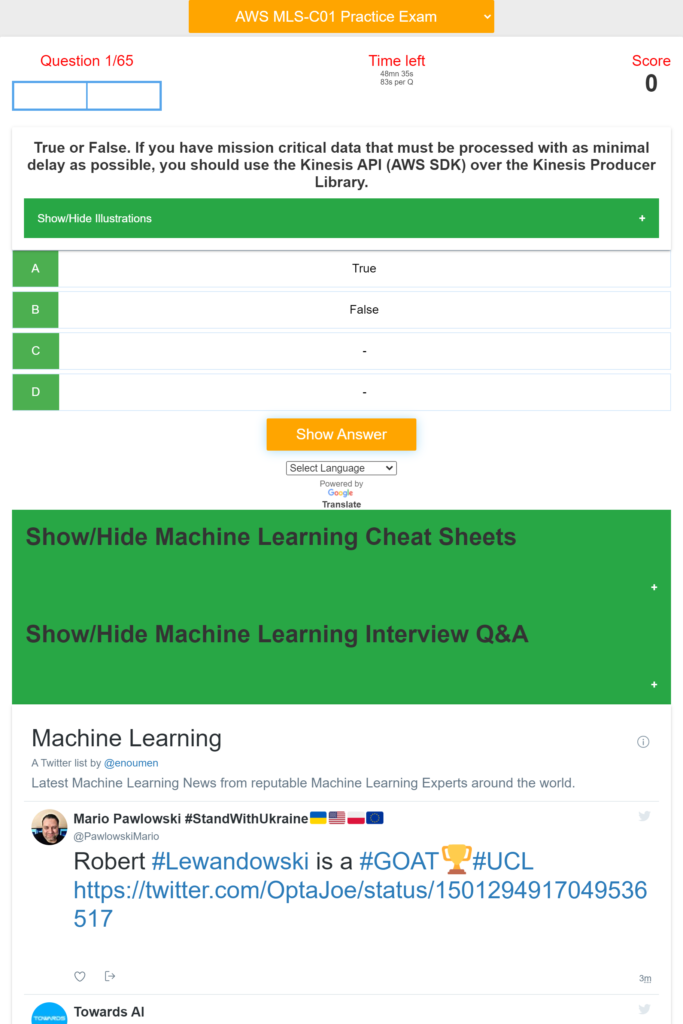

The App provides hundreds of quizzes and practice exams about:

– Machine Learning Operations on AWS

– Modeling

– Data Engineering

– Computer Vision

– Exploratory Data Analysis

– ML Implementation & Operations

– Machine Learning Basics Questions and Answers

– Machine Learning Advanced Questions and Answers

– Scorecard

– Countdown timer

– Machine Learning Cheat Sheets

– Machine Learning Interview Questions and Answers

– Machine Learning Latest News

The App covers Machine Learning Basics and Advanced topics including: NLP, Computer Vision, Python, linear regression, logistic regression, Sampling, dataset, statistical interaction, selection bias, non-Gaussian distribution, bias-variance trade-off, Normal Distribution, correlation and covariance, Point Estimates and Confidence Interval, A/B Testing, p-value, statistical power of sensitivity, over-fitting and under-fitting, regularization, Law of Large Numbers, Confounding Variables, Survivorship Bias, univariate, bivariate and multivariate, Resampling, ROC curve, TF/IDF vectorization, Cluster Sampling, etc.

Domain 1: Data Engineering

Create data repositories for machine learning.

Identify data sources (e.g., content and location, primary sources such as user data)

Determine storage mediums (e.g., DB, Data Lake, S3, EFS, EBS)

Identify and implement a data ingestion solution.

Data job styles/types (batch load, streaming)

Data ingestion pipelines (Batch-based ML workloads and streaming-based ML workloads), etc.

Domain 2: Exploratory Data Analysis

Sanitize and prepare data for modeling.

Perform feature engineering.

Analyze and visualize data for machine learning.

Domain 3: Modeling

Frame business problems as machine learning problems.

Select the appropriate model(s) for a given machine learning problem.

Train machine learning models.

Perform hyperparameter optimization.

Evaluate machine learning models.

Domain 4: Machine Learning Implementation and Operations

Build machine learning solutions for performance, availability, scalability, resiliency, and fault tolerance.

Recommend and implement the appropriate machine learning services and features for a given problem.

Apply basic AWS security practices to machine learning solutions.

Deploy and operationalize machine learning solutions.

Machine Learning Services covered:

Amazon Comprehend

AWS Deep Learning AMIs (DLAMI)

AWS DeepLens

Amazon Forecast

Amazon Fraud Detector

Amazon Lex

Amazon Polly

Amazon Rekognition

Amazon SageMaker

Amazon Textract

Amazon Transcribe

Amazon Translate

Other Services and topics covered are:

Ingestion/Collection

Processing/ETL

Data analysis/visualization

Model training

Model deployment/inference

Operational

AWS ML application services

Language relevant to ML (for example, Python, Java, Scala, R, SQL)

Notebooks and integrated development environments (IDEs)

S3, SageMaker, Kinesis, Lake Formation, Athena, Kibana, Redshift, Textract, EMR, Glue, CSV, JSON, IMG, Parquet or databases

Amazon EC2, Amazon Elastic Container Registry (Amazon ECR), Amazon Elastic Container Service, Amazon Elastic Kubernetes Service, Amazon Redshift

Important: To succeed in the real exam, do not memorize the answers in this app. It is very important that you understand why each answer is right or wrong, and the concepts behind it, by carefully reading the reference documents in the answers.

Note and disclaimer: We are not affiliated with Microsoft or Azure or Google or Amazon. The questions are put together based on the certification study guide and materials available online. The questions in this app should help you pass the exam but it is not guaranteed. We are not responsible for any exam you did not pass.

- [P] fraud detection model by /u/Puzzleheaded_Club_68 (Machine Learning) on May 3, 2024 at 10:04 am

I am trying to make a fraud detection model using an imbalanced data set, with fraud being so much higher than non-fraud. The data set is as follows: Time -> object in the format 1/1/2019 0:00; merchant -> string; category -> string; trans_num -> string; card_number -> float64; amount -> float64; name -> string; is_fraud -> either 0 or 1. I tried to preprocess the data first by dropping the merchant, name, category, trans_num, and card_number fields, converting Time from the format 1/1/2019 0:00 into hour, second, year, month, and day, and then dropping Time. I then used logistic regression, but the accuracy is low. Any ideas on how to preprocess the data and what algorithm to use?
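The preprocessing step described in this post, and the reason plain accuracy can mislead on imbalanced labels, can be sketched as follows. This is a minimal illustration using only the Python standard library. The month/day/year ordering of the timestamp and the toy label lists are assumptions, not details taken from the poster's data.

```python
from datetime import datetime


def time_features(raw: str) -> dict:
    """Split a timestamp like '1/1/2019 0:00' into numeric features."""
    # Assumption: month/day/year order; the post does not say which comes first.
    ts = datetime.strptime(raw, "%m/%d/%Y %H:%M")
    return {
        "year": ts.year,
        "month": ts.month,
        "day": ts.day,
        "hour": ts.hour,
        "minute": ts.minute,
        "weekday": ts.weekday(),  # 0 = Monday
    }


def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy labels (made up): a model that always predicts the majority class
# is 90% accurate here, yet it never finds the rare class.
y_true = [0] * 9 + [1]
y_pred = [0] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy, precision_recall(y_true, y_pred))
```

Reporting precision and recall for the rarer class, rather than accuracy alone, usually gives a clearer picture of whether a model on data like this is learning anything useful.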

- [R] HGRN2: Gated Linear RNNs with State Expansion by /u/SeawaterFlows (Machine Learning) on May 3, 2024 at 9:47 am

Paper: https://arxiv.org/abs/2404.07904 Code: https://github.com/OpenNLPLab/HGRN2 Standalone code (1): https://github.com/Doraemonzzz/hgru2-pytorch Standalone code (2): https://github.com/sustcsonglin/flash-linear-attention/tree/main/fla/models/hgrn2 Abstract: Hierarchically gated linear RNN (HGRN, Qin et al. 2023) has demonstrated competitive training speed and performance in language modeling, while offering efficient inference. However, the recurrent state size of HGRN remains relatively small, which limits its expressiveness. To address this issue, inspired by linear attention, we introduce a simple outer-product-based state expansion mechanism so that the recurrent state size can be significantly enlarged without introducing any additional parameters. The linear attention form also allows for hardware-efficient training. Our extensive experiments verify the advantage of HGRN2 over HGRN1 in language modeling, image classification, and Long Range Arena. Our largest 3B HGRN2 model slightly outperforms Mamba and LLaMa Architecture Transformer for language modeling in a controlled experiment setting; and performs competitively with many open-source 3B models in downstream evaluation while using much fewer total training tokens. submitted by /u/SeawaterFlows [link] [comments]

- [R] A Primer on the Inner Workings of Transformer-based Language Models by /u/SubstantialDig6663 (Machine Learning) on May 3, 2024 at 9:46 am

Authors: Javier Ferrando (UPC), Gabriele Sarti (RUG), Arianna Bisazza (RUG), Marta Costa-jussà (Meta) Paper: https://arxiv.org/abs/2405.00208 Abstract: The rapid progress of research aimed at interpreting the inner workings of advanced language models has highlighted a need for contextualizing the insights gained from years of work in this area. This primer provides a concise technical introduction to the current techniques used to interpret the inner workings of Transformer-based language models, focusing on the generative decoder-only architecture. We conclude by presenting a comprehensive overview of the known internal mechanisms implemented by these models, uncovering connections across popular approaches and active research directions in this area. https://preview.redd.it/57y44wwdn6yc1.png?width=1486&format=png&auto=webp&s=7b7fb38a59f3819ce0d601140b1e031b98c17183 submitted by /u/SubstantialDig6663 [link] [comments]

- [D] Help: 1. Current PhD position is alright? 2. (3d) computer vision; point cloud processing, is my Research Roadmap correct? by /u/Same_Half3758 (Machine Learning) on May 3, 2024 at 9:20 am

I am currently a PhD student in the middle of my second semester (almost 7 months in). I am focusing on point cloud research for classification and segmentation, entirely on my own, with no guidance from my professor or fellow PhD students. I have two particular questions.

First, should I drop out of my PhD under my current supervisor? I ask because there is almost no supervision or guidance. In a world with this much knowledge and such fast-moving research, is it possible to end up with a satisfactory PhD on my own, considering that my understanding of the field (point cloud processing) and of deep learning is still quite elementary? I have the courage to work, even though it is quite difficult without a fertile environment. Should I quit and find a better place to pursue my research? How bad is this situation?

Second, I am more concerned about my research strategy, which is kind of on the fly. From the beginning of the program until 3 months ago, I was solely reading groundbreaking papers, i.e. PointNet, PointNet++, and the Point Transformer series. I spent 3-4 months exploring only the very surface of the field, because it was my first interaction with it and, honestly, I did not have a very good understanding of deep learning either; I just grasped simple, high-level concepts and ideas. But around 3 months ago, I realized that this way I would never come up with my own idea and contribute to the field. Lack of knowledge, coupled with absolutely zero supervision and this naïve reading, was not promising. So I decided to start from scratch with the PointNet paper and understand it end to end: the concepts in the paper and its code implementation. This is still ongoing, and I definitely feel I am learning. But what should my next step be? Different methods have been used and have structured the literature in the field. Should I pursue the same strategy in many directions, or stick to one for a long time? I do not even know exactly what options I have here! 🙁 I hope this is clear enough.

- [D] Fine-tune Phi-3 model for domain specific data - seeking advice and insights by /u/aadityaura (Machine Learning) on May 3, 2024 at 7:10 am

Hi, I am currently working on fine-tuning the Phi-3 model for financial data. While the loss is decreasing during training, suggesting that the model is learning quite well, the results on a custom benchmark are surprisingly poor. In fact, the accuracy has decreased compared to the base model. Results I've observed: Phi-3-mini-4k-instruct (base model): Average domain accuracy of 40% Qlora - Phi-3-mini-4k-instruct (fine-tuned model): Average domain accuracy of 35% I have tried various approaches, including QLora, Lora, and FFT, but all the results are poor compared to the base model. Moreover, I have also experimented with reducing the sequence length to 2k in an attempt to constrain the model and prevent it from going off-track, but unfortunately, this has not yielded any improvement. I'm wondering if there might be issues with the hyperparameters, such as the learning rate, or if there are any recommendations on how I can effectively fine-tune this model for better performance on domain-specific data. If anyone has successfully fine-tuned the Phi-3 model on domain-specific data, I would greatly appreciate any insights or advice you could share. Thank you in advance for your help and support! qlora configuration: sequence_len: 4000 sample_packing: true pad_to_sequence_len: true trust_remote_code: True adapter: qlora lora_r: 256 lora_alpha: 512 lora_dropout: 0.05 lora_target_linear: true lora_target_modules: - q_proj - v_proj - k_proj - o_proj - gate_proj - down_proj - up_proj gradient_accumulation_steps: 1 micro_batch_size: 2 num_epochs: 4 optimizer: adamw_torch lr_scheduler: cosine learning_rate: 0.00002 warmup_steps: 100 evals_per_epoch: 4 eval_table_size: saves_per_epoch: 1 debug: deepspeed: weight_decay: 0.0 https://preview.redd.it/7afyhxcjv5yc1.png?width=976&format=png&auto=webp&s=1ce3efe6df6e4533bad5ec2f23e4f4968736bd56 submitted by /u/aadityaura [link] [comments]
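As a quick sanity check on the configuration in this post, two derived quantities can be computed directly. The sketch below assumes the standard LoRA convention in which the adapter update is scaled by `lora_alpha / lora_r`. The `num_devices` parameter is hypothetical, since the post does not say how many GPUs were used.

```python
def lora_scaling(lora_alpha: float, lora_r: int) -> float:
    """Scaling applied to the low-rank update in standard LoRA."""
    return lora_alpha / lora_r


def sequences_per_step(micro_batch_size: int,
                       gradient_accumulation_steps: int,
                       num_devices: int = 1) -> int:
    """Number of sequences contributing to each optimizer step."""
    # num_devices is an assumption; the post does not state the GPU count.
    return micro_batch_size * gradient_accumulation_steps * num_devices


# Values copied from the posted configuration.
scale = lora_scaling(lora_alpha=512, lora_r=256)
batch = sequences_per_step(micro_batch_size=2, gradient_accumulation_steps=1)
tokens = batch * 4000  # sequence_len, with pad_to_sequence_len enabled
print(scale, batch, tokens)
```

On a single device this works out to a scaling of 2.0 and 2 sequences (8,000 tokens) per optimizer step, which may be a useful starting point when deciding which hyperparameters to vary first.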

- [R] positive draws for bioDraws by /u/h2_so4_ (Machine Learning) on May 3, 2024 at 6:57 am

I'm a beginner in Python. Please help me with the following situation; my research is stuck. Consider the following equation, in which I have to generate random values (I have currently set the method to NORMAL_MLHS): L1 = c + sigmaL1 * bioDraws('E_L1', 'NORMAL_MLHS'), where L1 is an endogenous variable and c is an estimable constant whose lower bound is 0. The lower bound for sigmaL1 is also 0. Which method can I use instead of 'NORMAL_MLHS' to ensure that it generates positive values, and hence that L1 is positive?
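One common way to guarantee positivity, sketched here outside of any particular estimation library, is to exponentiate the linear term so that the variable follows a log-normal distribution. Note that this changes the model specification rather than the draw type. The function names and the use of Python's `random` module are illustrative assumptions, not the poster's setup.

```python
import math
import random


def additive_draws(c: float, sigma: float, n: int, seed: int = 0) -> list:
    """c + sigma * z with z ~ N(0, 1); values can be negative."""
    rng = random.Random(seed)
    return [c + sigma * rng.gauss(0.0, 1.0) for _ in range(n)]


def lognormal_draws(c: float, sigma: float, n: int, seed: int = 0) -> list:
    """exp(c + sigma * z) with z ~ N(0, 1); values are always positive."""
    rng = random.Random(seed)
    return [math.exp(c + sigma * rng.gauss(0.0, 1.0)) for _ in range(n)]


additive = additive_draws(c=0.5, sigma=2.0, n=1000)
positive = lognormal_draws(c=0.5, sigma=2.0, n=1000)
print(min(additive) < 0, min(positive) > 0)
```

With a normal draw, bounding `c` and `sigmaL1` below by 0 does not by itself keep `L1` positive, because the draw can be arbitrarily negative; the exponential form avoids this regardless of the parameter values.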

- [D] Distance Estimation - Real World coordinates by /u/Embarrassed_Top_5901 (Machine Learning) on May 3, 2024 at 5:38 am

Hello, I'm sorry for reposting this question, but this is very important and I need assistance. I have three cameras in a room in different locations (front, left, and right wall). I should be able to find the distance between humans in the room in meters. I performed camera calibration for all the cameras. I tried matching the common points using SIFT and then applied the DLT method, but the values are way off and not even close to the actual values. I tried stereo vision as well, but that is not giving me close values either. I also have the distances between the cameras in meters. I'm a beginner in computer vision and I should complete this task soon, but I have been stuck on this for a month, and I'm getting tired as I'm not able to solve this issue and I'm running out of solutions. I would really appreciate it if someone could help me and guide me in the right direction. Thanks a lot for your help and time 😄
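The geometric core of this task can be illustrated with midpoint triangulation, a simpler alternative to the DLT method mentioned in the post. The sketch assumes that calibration has already turned each camera's pixel observation into a 3D ray (camera position plus viewing direction) in a shared world frame measured in meters. The camera positions and directions in the example are made up.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(o1, d1, o2, d2):
    """Midpoint of the shortest segment joining two 3D rays.

    o1, o2: camera centers; d1, d2: ray directions (need not be unit length).
    """
    w = [a - b for a, b in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Hypothetical setup: two cameras 4 m apart observing two people.
cam1, cam2 = (0.0, 0.0, 0.0), (4.0, 0.0, 0.0)
person_a = triangulate_midpoint(cam1, (2.0, 0.0, 3.0), cam2, (-2.0, 0.0, 3.0))
person_b = triangulate_midpoint(cam1, (2.0, 4.0, 3.0), cam2, (-2.0, 4.0, 3.0))
print(math.dist(person_a, person_b))
```

If results from a real setup are far off, a frequent cause is that the rays from different cameras are not expressed in the same world frame, so checking the extrinsic parameters against the known camera-to-camera distances is a reasonable first test.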

- [R] Iterative Reasoning Preference Optimization by /u/topcodemangler (Machine Learning) on May 3, 2024 at 3:20 am

- [D] Looking at hardware options for an AI/LLM development machine for work. Training and inference on small-to-mid sized models. Lost in hardware specs -- details in post. by /u/IThrowShoes (Machine Learning) on May 3, 2024 at 1:26 am

Greetings, At work I've been tasked with researching and developing some stuff around using LLMs in tandem with our in-house software suite. I can't go into many details due to policies, but it would eventually involve some PII identification/extraction, some document summarization, probably a little bit of RAG, etc. Over the last month or two, I've done some preliminary groundwork using very small models to show that something "is possible", but we'd like to take it to the next level. At this point I've been using a combination of my laptop's GPU (just a mobile RTX 3060) and my boss' RTX 4080 on an AMD Threadripper machine. The 3060 falls over pretty quickly even on some of the smaller models, but the 4080 does pretty well at inferencing. But as you'd imagine, I run out of VRAM pretty quickly trying to do anything slightly more robust.

Part of my marching orders is to spec out some hardware for use in a local development machine/desktop. We have already put in an order for more production-grade hardware with a very sizable amount of VRAM (I think it hovers around 1 terabyte of VRAM, but I'm not 100% sure) for use in our datacenter, but that won't arrive for a few months at least. With that, I am looking for some recommendations for a development workstation. I can't quite decide whether I should run multiple GPUs or shell out for something that has more VRAM built in. For example, do I run dual 3090s? Do I run an A6000 or two? Or one? Would a single RTX 6000 Ada (48GB) be sufficient?

Given that: This is for development only, not production. I want to inference small-to-mid sized models (probably up to 30b params). I probably want to fine-tune small-to-mid sized models, if anything as a point of comparison, even using LoRA/QLoRA. Fine-tuning would be done on the Python side, and inferencing would be done using HuggingFace's candle library for Rust. Using something cloud-based is discouraged on my end (can't go into details), and whatever software gets built that eventually lands in production can't talk with any external API anyway. I don't mind using quantized models for development, but at some point I'd like to try full-precision models (which may have to wait for the production hardware to show up). I would say money is not a factor, but if I can budget something under $15k, that'd be ideal.

What would you all recommend? Thanks!

- [R] Language settings in PrivateGPT implementation by /u/povedaaqui (Machine Learning) on May 2, 2024 at 10:07 pm

Hello. I'm running PrivateGPT in a language other than English, and I don't quite understand how the language settings work. Based on the example file, does it mean that when the first three parameters match, the prompt style will be set (in this case, "llama2")? I'm looking for the best possible settings for the foundational model I'm using with languages other than English. settings-en.yaml: local: llm_hf_repo_id: TheBloke/Mistral-7B-Instruct-v0.1-GGUF llm_hf_model_file: mistral-7b-instruct-v0.1.Q4_K_M.gguf embedding_hf_model_name: BAAI/bge-small-en-v1.5 prompt_style: "llama2" For example, for phi3: phi3: llm_hf_repo_id: microsoft/Phi-3-mini-4k-instruct-gguf llm_hf_model_file: Phi-3-mini-4k-instruct-q4.gguf embedding_hf_model_name: nomic-ai/nomic-embed-text-v1.5 prompt_style: "phi3"

- [D] Good strategies / resources to improve MLOps skills as a PhD student / researcher by /u/fliiiiiiip (Machine Learning) on May 2, 2024 at 9:52 pm

A lot of researchers / PhD students in ML have prospects of joining the industry eventually (in the US, about 80% of ML PhDs are in industry, according to Stanford's recently released AI Index). What are some good tips / resources for someone who wants to develop more practical, deployment-oriented MLOps skills? For example: setting up clusters, relevant cloud services (e.g. AWS), Docker, Kubernetes, developing internal tools for model training / data labelling... Stuff like that.

- [Discussion] Should I go to ICML and present my paper? by /u/meni_s (Machine Learning) on May 2, 2024 at 9:30 pm

I finished my Ph.D. a year ago, left academia, and became a data scientist at a tech company. I like it, but I'm still thinking about somehow moving to a more research-oriented position in the future. Not sure, though. Anyway, an unfinished work of mine was picked up by a friend, who finished it and submitted it to ICML. It got accepted (yay!). I now wonder: besides the fact that I find conferences fun, is there an actual benefit in attending? In presenting the paper? I know that for academics / researchers, this is a great opportunity to meet people and hear about current research. But as I'm not there anymore, is there a real reason to go? Quite a weird question, but I am just not sure, and I'd be happy to hear your thoughts.

- [P] Panza: A personal email assistant, trained and running on-device by /u/eldar_ciki (Machine Learning) on May 2, 2024 at 9:07 pm

Tired of crafting well-polished emails and wish you had an assistant to take over the hard work while mimicking your writing style? Introducing Panza, a personalized LLM email assistant that runs entirely on your device! Choose between Llama-3 or Mistral, tailor it to your unique style, and let it write the emails for you. Take a look at our demo and give it a try on your emails at: https://github.com/IST-DASLab/PanzaMail Some technical details about Panza: Panza is an automated email assistant customized to your writing style and past email history. Panza produces a fine-tuned LLM that matches your writing style, pairing it with a Retrieval-Augmented Generation (RAG) component which helps it produce relevant emails. Panza **can be trained and run entirely locally**. Currently, it requires a single GPU with 16-24 GiB of memory, but we also plan to release a CPU-only version. Training and execution are also quick - for a dataset on the order of 1000 emails, training Panza takes well under an hour, and generating a new email takes a few seconds at most. submitted by /u/eldar_ciki [link] [comments]

- AWS Inferentia and AWS Trainium deliver lowest cost to deploy Llama 3 models in Amazon SageMaker JumpStart by Xin Huang (AWS Machine Learning Blog) on May 2, 2024 at 9:07 pm

Today, we’re excited to announce the availability of Meta Llama 3 inference on AWS Trainium and AWS Inferentia based instances in Amazon SageMaker JumpStart. The Meta Llama 3 models are a collection of pre-trained and fine-tuned generative text models. Amazon Elastic Compute Cloud (Amazon EC2) Trn1 and Inf2 instances, powered by AWS Trainium and AWS

- [Discussion] Seeking help to find the better GPU setup. Three H100 vs Five A100? by /u/nlpbaz (Machine Learning) on May 2, 2024 at 7:49 pm

Long story short, a company has a budget for buying GPUs to fine-tune LLMs (probably 70B ones), and I have to do the research to find which GPU setup is best for their budget. The budget can buy three H100 GPUs or five A100 GPUs. I tried my best, but it is still not clear to me which of these setups is better. While five A100s have more VRAM, they say H100s are 2-8 times faster than A100s! I'm seeking help. Any valuable insights will be appreciated.
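The memory side of this comparison can be estimated with back-of-the-envelope arithmetic. The sketch below rests on several assumptions that the post does not state: 80 GB of memory per GPU for both cards, roughly 16 bytes per parameter for full fine-tuning with Adam in mixed precision (weights, gradients, and optimizer states, excluding activations), and 0.5 bytes per parameter for 4-bit quantized base weights as used in QLoRA-style training.

```python
GB = 1_000_000_000


def memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Approximate memory for model state, ignoring activations."""
    return num_params * bytes_per_param / GB


NUM_PARAMS = 70_000_000_000   # "70B" model from the post
GPU_MEMORY_GB = 80            # assumption: 80 GB variants of both cards

setups = {"3x H100": 3 * GPU_MEMORY_GB, "5x A100": 5 * GPU_MEMORY_GB}
needs = {
    "fp16 weights only": memory_gb(NUM_PARAMS, 2),
    "full fine-tune (Adam, mixed precision)": memory_gb(NUM_PARAMS, 16),
    "4-bit base weights (QLoRA-style)": memory_gb(NUM_PARAMS, 0.5),
}

for setup, total in setups.items():
    for name, need in needs.items():
        verdict = "fits" if need <= total else "does not fit"
        print(f"{setup} ({total} GB): {name} needs ~{need:.0f} GB, {verdict}")
```

Under these assumptions, neither setup holds the state for a full fine-tune of a 70B model without offloading, while both have room for adapter-based fine-tuning on quantized weights, so the choice may come down to throughput versus headroom for longer sequences and larger batches.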

- [D] Something I always think about, for top conferences like ICML, NeurIPS, CVPR, etc. How many papers are really groundbreaking? by /u/oddhvdfscuyg (Machine Learning) on May 2, 2024 at 6:37 pm

I have some papers in top venues myself, but whenever I sit down and am brutally honest with myself, I feel my work is good but just not that impactful, like one more brick in the wall. I wonder how often we can see something as impactful as "Attention is all you need", for example.

- [D] Has anyone successfully gotten into ML consulting? by /u/20231027 (Machine Learning) on May 2, 2024 at 6:14 pm

Please share your journey and lessons. Thanks!

- [D] Benchmark creators should release their benchmark datasets in stages by /u/kei147 (Machine Learning) on May 2, 2024 at 5:36 pm

There's been a lot of discussion about benchmark contamination, where models are trained on the data they are ultimately evaluated on. For example, a recent paper showed that models performed substantially better on the public GSM8K vs GSM1K, which was a benchmark recently created by Scale AI to match GSM8K on difficulty and other measures. Because of these concerns about benchmark contamination, it is often hard to take a research lab's claims about model performance at face value. It's difficult to know whether a model gets good benchmark performance because it is generally capable or because its pre-training data was contaminated and it overfit on the benchmarks. One solution to this problem is for benchmark creators to release their datasets in stages. For example, a benchmark creator could release 50% of their dataset upon release, and then release the remaining 50% in two stages, 25% one year later and 25% two years later. This would enable model evaluators to check for benchmark contamination by comparing performance on the subset of data released prior to the training cutoff vs. the subset released after the training cutoff. It would also give us a better understanding of how well models are actually performing. One last point - this staged release process wouldn't be anywhere near as helpful for benchmarks created by scraping the web, as even the later-released data subsets could be found in the training data. But it should be useful for other kinds of benchmarks. submitted by /u/kei147 [link] [comments]
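The contamination check proposed in this post can be sketched as a comparison of accuracy on items released before and after a model's training cutoff. The data layout (a list of release-date and correctness pairs) and all names here are hypothetical, and a real analysis would also need to account for sampling noise.

```python
from datetime import date


def accuracy(outcomes):
    """Fraction of correct answers in a list of booleans."""
    if not outcomes:
        raise ValueError("no benchmark items in this subset")
    return sum(outcomes) / len(outcomes)


def contamination_gap(items, training_cutoff):
    """Accuracy on items released up to the cutoff minus accuracy after it.

    items: iterable of (release_date, answered_correctly) pairs.
    A large positive gap is a hint (not proof) of contamination.
    """
    before = [ok for released, ok in items if released <= training_cutoff]
    after = [ok for released, ok in items if released > training_cutoff]
    return accuracy(before) - accuracy(after)


# Made-up results for a benchmark released in stages.
results = [
    (date(2023, 1, 1), True), (date(2023, 1, 1), True),
    (date(2023, 1, 1), True), (date(2023, 1, 1), False),
    (date(2024, 1, 1), True), (date(2024, 1, 1), False),
]
gap = contamination_gap(results, training_cutoff=date(2023, 6, 30))
print(gap)
```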

- [D] where to store a lot of dataframes of ML features by /u/Logical_Ad8570 (Machine Learning) on May 2, 2024 at 5:27 pm

Hi all, I have a lot of pandas dataframes representing features that will be used to train my ML models. To provide more context: each pandas dataframe is a collection of time series (one column per time series) created based on a combination of 5 parameters. Each of these parameters can have up to 5 different values, and one combination of parameters defines one dataframe. This means that I have approximately 2,000 dataframes with a shape of (3000, 1000). The only requirement I have is to be able to access them efficiently. I don't need to access all of them every time. I've considered using a SQL database where the name of each table is the parameter combination, but perhaps there are better ways to do this. Any advice from someone who has already dealt with a similar problem?
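One possible layout, sketched here as an assumption rather than a recommendation from the post, is one columnar file per parameter combination, with the combination encoded in a deterministic file name so that a single dataframe can be located without scanning the rest. The parameter names and the `features` directory are hypothetical, and the size estimate assumes 8-byte float64 values.

```python
from pathlib import Path


def frame_path(root: str, params: dict) -> Path:
    """Deterministic file path for one parameter combination."""
    # Sorting the keys makes the name independent of dict ordering.
    # Assumption: values are simple (no path separators or '__').
    name = "__".join(f"{key}={params[key]}" for key in sorted(params))
    return Path(root) / f"{name}.parquet"


def total_bytes(num_frames: int, rows: int, cols: int,
                bytes_per_value: int = 8) -> int:
    """Uncompressed size of all frames, assuming a fixed-width dtype."""
    return num_frames * rows * cols * bytes_per_value


path = frame_path("features", {"window": 20, "lag": 5, "asset": "A"})
size = total_bytes(num_frames=2000, rows=3000, cols=1000)
print(path.name, size / 1e9, "GB")
```

With pandas, each frame could then be written with `DataFrame.to_parquet` and read back with `pandas.read_parquet`, whose `columns` argument allows loading only selected time series; both require a Parquet engine such as pyarrow to be installed.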

- [P] spRAG - Open-source RAG implementation for challenging real-world tasks by /u/zmccormick7 (Machine Learning) on May 2, 2024 at 4:50 pm

Hey everyone, I’m Zach from Superpowered AI (YC S22). We’ve been working in the RAG space for a little over a year now, and we’ve recently decided to open-source all of our core retrieval tech. [spRAG](https://github.com/SuperpoweredAI/spRAG) is a retrieval system that’s designed to handle complex real-world queries over dense text, like legal documents and financial reports. As far as we know, it produces the most accurate and reliable results of any RAG system for these kinds of tasks. For example, on FinanceBench, which is an especially challenging open-book financial question answering benchmark, spRAG gets 83% of questions correct, compared to 19% for the vanilla RAG baseline (which uses Chroma + OpenAI Ada embeddings + LangChain). You can find more info about how it works and how to use it in the project’s README. We’re also very open to contributions. We especially need contributions around integrations (i.e. adding support for more vector DBs, embedding models, etc.) and around evaluation. Happy to answer any questions! [GitHub repo](https://github.com/SuperpoweredAI/spRAG) submitted by /u/zmccormick7 [link] [comments]

- Revolutionize Customer Satisfaction with tailored reward models for your business on Amazon SageMaker by Dinesh Subramani (AWS Machine Learning Blog) on May 2, 2024 at 4:19 pm

As more powerful large language models (LLMs) are used to perform a variety of tasks with greater accuracy, the number of applications and services that are being built with generative artificial intelligence (AI) is also growing. With great power comes responsibility, and organizations want to make sure that these LLMs produce responses that align with

- Amazon Personalize launches new recipes supporting larger item catalogs with lower latency by Jingwen Hu (AWS Machine Learning Blog) on May 2, 2024 at 3:58 pm

We are excited to announce the general availability of two advanced recipes in Amazon Personalize, User-Personalization-v2 and Personalized-Ranking-v2 (v2 recipes), which are built on the cutting-edge Transformers architecture to support larger item catalogs with lower latency. In this post, we summarize the new enhancements, and guide you through the process of training a model and providing recommendations for your users.

- Get started with Amazon Titan Text Embeddings V2: A new state-of-the-art embeddings model on Amazon Bedrock by Shreyas Subramanian (AWS Machine Learning Blog) on May 2, 2024 at 2:41 pm

Embeddings are integral to various natural language processing (NLP) applications, and their quality is crucial for optimal performance. They are commonly used in knowledge bases to represent textual data as dense vectors, enabling efficient similarity search and retrieval. In Retrieval Augmented Generation (RAG), embeddings are used to retrieve relevant passages from a corpus to provide

- [D] Paper accepted to ICML but not attending in person? by /u/Normal-Comparison-60 (Machine Learning) on May 2, 2024 at 2:04 pm

Our paper just got accepted to ICML. Tbh it was a happy surprise. Unfortunately, both authors either do not have a return visa to the US or, with high probability, will not have a non-expired passport in July for the conference. I wonder if it is acceptable to pay the $475 conference registration fee but not attend, and still have our paper published in the proceedings. I notice that conference registration does include virtual access to all the sessions and tutorials. But I am unsure about the publication part.

- [D] Why do juniors (undergraduates or first- to second-year PhD students) have so many papers at major machine learning conferences like ICML, ICLR, NeurIPS, etc.? by /u/ShiftStrange1701 (Machine Learning) on May 2, 2024 at 11:56 am

Hello everyone, today the ICML results are out, congratulations to all those who have papers accepted here. I'm not an academic myself, but sometimes I read papers at these conferences for work, and it's really interesting. I just have a question: why do juniors have so many papers at these conferences? I thought this was something you would have to learn throughout your 5 years of PhD and almost only achieve in the final years of your PhD. Furthermore, I've heard that to get into top PhD programs in the US, you need to have some papers beforehand. So, if a junior can publish papers early like that, why do they have to spend 5 long years pursuing a PhD? submitted by /u/ShiftStrange1701 [link] [comments]

- [D] How can I detect the text orientation using MMOCR or MMDET models? by /u/tmargary (Machine Learning) on May 2, 2024 at 8:22 am

My training images contain text that appears in various orientations. As a result, I don't know the original orientation, since, for example, DBNetPP does not return the bbox corners in a natural orientation order. I have tried other pretrained detection models, but they also do not do that, maybe because they were not trained on rotated images. How can I solve this issue? https://preview.redd.it/tvq6fp9k3zxc1.png?width=1000&format=png&auto=webp&s=ecf3f3e757e6450e34c1257f9eb8e0fec4ce7bba https://preview.redd.it/yea66hdl3zxc1.png?width=1000&format=png&auto=webp&s=4eafb6d4354c6a0d851d6b5fad456f99441d9bc2

- [D] Modern best coding practices for Pytorch (for research)? by /u/SirBlobfish (Machine Learning) on May 1, 2024 at 9:24 pm

Hi all, I've been using PyTorch since 2019, and it has changed a lot in that time (especially since Hugging Face). Are there any modern guides/style docs/example repos you would recommend? For example, are named tensors a good/common practice? Is PyTorch Lightning recommended? What are the best config management tools these days? How often do you use torch.jit.script or torch.compile?

- Simple guide to training Llama 2 with AWS Trainium on Amazon SageMaker by Marco Punio (AWS Machine Learning Blog) on May 1, 2024 at 6:53 pm

Large language models (LLMs) are making a significant impact in the realm of artificial intelligence (AI). Their impressive generative abilities have led to widespread adoption across various sectors and use cases, including content generation, sentiment analysis, chatbot development, and virtual assistant technology. Llama2 by Meta is an example of an LLM offered by AWS. Llama

- [P] I reproduced Anthropic's recent interpretability research by /u/neverboosh (Machine Learning) on May 1, 2024 at 5:51 pm

Not that many people are paying attention to LLM interpretability research when capabilities research is moving as fast as it currently is, but interpretability is really important and in my opinion, really interesting and exciting! Anthropic has made a lot of breakthroughs in recent months, the biggest one being "Towards Monosemanticity". The basic idea is that they found a way to train a sparse autoencoder to generate interpretable features based on transformer activations. This allows us to look at the activations of a language model during inference, and understand which parts of the model are most responsible for predicting each next token. Something that really stood out to me was that the autoencoders they train to do this are actually very small, and would not require a lot of compute to get working. This gave me the idea to try to replicate the research by training models on my M3 Macbook. After a lot of reading and experimentation, I was able to get pretty strong results! I wrote a more in-depth post about it on my blog here: https://jakeward.substack.com/p/monosemanticity-at-home-my-attempt I'm now working on a few follow-up projects using this tech, as well as a minimal implementation that can run in a Colab notebook to make it more accessible. If you read my blog, I'd love to hear any feedback! submitted by /u/neverboosh [link] [comments]

- [R] KAN: Kolmogorov-Arnold Networks by /u/SeawaterFlows (Machine Learning) on May 1, 2024 at 5:03 pm

Paper: https://arxiv.org/abs/2404.19756 Code: https://github.com/KindXiaoming/pykan Quick intro: https://kindxiaoming.github.io/pykan/intro.html Documentation: https://kindxiaoming.github.io/pykan/ Abstract: Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all -- every weight parameter is replaced by a univariate function parametrized as a spline. We show that this seemingly simple change makes KANs outperform MLPs in terms of accuracy and interpretability. For accuracy, much smaller KANs can achieve comparable or better accuracy than much larger MLPs in data fitting and PDE solving. Theoretically and empirically, KANs possess faster neural scaling laws than MLPs. For interpretability, KANs can be intuitively visualized and can easily interact with human users. Through two examples in mathematics and physics, KANs are shown to be useful collaborators helping scientists (re)discover mathematical and physical laws. In summary, KANs are promising alternatives for MLPs, opening opportunities for further improving today's deep learning models which rely heavily on MLPs.
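To make the "learnable activation functions on edges" idea concrete, here is a simplified forward-pass sketch. It is not the pykan implementation: as an assumption for brevity, each edge function is a piecewise-linear (degree-1) spline on a shared grid, and the names `linear_spline`, `kan_layer`, and `edge_coeffs` are hypothetical. Each output is the sum of one univariate function per incoming edge, with no linear weight matrix.

```python
from bisect import bisect_right

def linear_spline(x, grid, coeffs):
    """Evaluate a piecewise-linear spline with knots `grid` and knot values `coeffs`."""
    # Inputs outside the grid are clamped to the boundary values.
    if x <= grid[0]:
        return coeffs[0]
    if x >= grid[-1]:
        return coeffs[-1]
    k = bisect_right(grid, x) - 1  # index of the knot at or just left of x
    t = (x - grid[k]) / (grid[k + 1] - grid[k])
    return (1 - t) * coeffs[k] + t * coeffs[k + 1]

def kan_layer(xs, grid, edge_coeffs):
    """edge_coeffs[j][i] holds the spline values for the edge from input i to output j."""
    return [
        sum(linear_spline(x, grid, edge_coeffs[j][i]) for i, x in enumerate(xs))
        for j in range(len(edge_coeffs))
    ]

grid = [-1.0, 0.0, 1.0]
edge_coeffs = [
    [[1.0, 0.0, 1.0], [-1.0, 0.0, 1.0]],  # output 0: |x0| + x1 on [-1, 1]
    [[0.0, 0.0, 2.0], [0.0, 0.0, 0.0]],   # output 1: 2 * max(x0, 0) on [-1, 1]
]
out = kan_layer([0.5, -0.5], grid, edge_coeffs)
```

In a trained network the knot values in `edge_coeffs` would be the learnable parameters; here they are set by hand so that each edge function is easy to read off.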

- Fine-tune and deploy language models with Amazon SageMaker Canvas and Amazon Bedrock by Yann Stoneman (AWS Machine Learning Blog) on May 1, 2024 at 4:31 pm

Imagine harnessing the power of advanced language models to understand and respond to your customers’ inquiries. Amazon Bedrock, a fully managed service providing access to such models, makes this possible. Fine-tuning large language models (LLMs) on domain-specific data supercharges tasks like answering product questions or generating relevant content. In this post, we show how Amazon

- Improving inclusion and accessibility through automated document translation with an open source app using Amazon Translate by Philip Whiteside (AWS Machine Learning Blog) on May 1, 2024 at 4:20 pm

Organizations often offer support in multiple languages, saying “contact us for translations.” However, customers who don’t speak the predominant language often don’t know that translations are available or how to request them. This can lead to poor customer experience and lost business. A better approach is proactively providing information in multiple languages so customers can

- Automate chatbot for document and data retrieval using Agents and Knowledge Bases for Amazon Bedrock by Jundong Qiao (AWS Machine Learning Blog) on May 1, 2024 at 4:02 pm

Numerous customers face challenges in managing diverse data sources and seek a chatbot solution capable of orchestrating these sources to offer comprehensive answers. This post presents a solution for developing a chatbot capable of answering queries from both documentation and databases, with straightforward deployment. Amazon Bedrock is a fully managed service that offers a choice

- Build private and secure enterprise generative AI apps with Amazon Q Business and AWS IAM Identity Center by Abhinav Jawadekar (AWS Machine Learning Blog) on April 30, 2024 at 10:49 pm

As of April 30, 2024 Amazon Q Business is generally available. Amazon Q Business is a conversational assistant powered by generative artificial intelligence (AI) that enhances workforce productivity by answering questions and completing tasks based on information in your enterprise systems. Your employees can access enterprise content securely and privately using web applications built with

- Enhance customer service efficiency with AI-powered summarization using Amazon Transcribe Call Analytics by Ami Dani (AWS Machine Learning Blog) on April 30, 2024 at 7:58 pm

In the fast-paced world of customer service, efficiency and accuracy are paramount. After each call, contact center agents often spend up to a third of the total call time summarizing the customer conversation. Additionally, manual summarization can lead to inconsistencies in the style and level of detail due to varying interpretations of note-taking guidelines. This

- Accelerate software development and leverage your business data with generative AI assistance from Amazon Q by Swami Sivasubramanian (AWS Machine Learning Blog) on April 30, 2024 at 12:16 pm

We believe generative artificial intelligence (AI) has the potential to transform virtually every customer experience. To make this possible, we’re rapidly innovating to provide the most comprehensive set of capabilities across the three layers of the generative AI stack. This includes the bottom layer with infrastructure to train Large Language Models (LLMs) and other Foundation

- Amazon Q Business and Amazon Q in QuickSight empowers employees to be more data-driven and make better, faster decisions using company knowledge by Mukesh Karki (AWS Machine Learning Blog) on April 30, 2024 at 12:14 pm

Today, we announced the General Availability of Amazon Q, the most capable generative AI powered assistant for accelerating software development and leveraging companies’ internal data. “During the preview, early indications signaled Amazon Q could help our customers’ employees become more than 80% more productive at their jobs; and with the new features we’re planning on

- Develop and train large models cost-efficiently with Metaflow and AWS Trainium by Ville Tuulos (AWS Machine Learning Blog) on April 29, 2024 at 7:20 pm

This is a guest post co-authored with Ville Tuulos (Co-founder and CEO) and Eddie Mattia (Data Scientist) of Outerbounds. To build a production-grade AI system today (for example, to do multilingual sentiment analysis of customer support conversations), what are the primary technical challenges? Historically, natural language processing (NLP) would be a primary research and development

- Cohere Command R and R+ are now available in Amazon SageMaker JumpStart by Pradeep Prabhakaran (AWS Machine Learning Blog) on April 29, 2024 at 5:47 pm

This blog post is co-written with Pradeep Prabhakaran from Cohere. Today, we are excited to announce that Cohere Command R and R+ foundation models are available through Amazon SageMaker JumpStart to deploy and run inference. Command R/R+ are the state-of-the-art retrieval augmented generation (RAG)-optimized models designed to tackle enterprise-grade workloads. In this post, we walk through how

- Revolutionizing large language model training with Arcee and AWS Trainium by Mark McQuade (AWS Machine Learning Blog) on April 29, 2024 at 3:21 pm

This is a guest post by Mark McQuade, Malikeh Ehghaghi, and Shamane Siri from Arcee. In recent years, large language models (LLMs) have gained attention for their effectiveness, leading various industries to adapt general LLMs to their data for improved results, making efficient training and hardware availability crucial. At Arcee, we focus primarily on enhancing

- Databricks DBRX is now available in Amazon SageMaker JumpStart by Shikhar Kwatra (AWS Machine Learning Blog) on April 26, 2024 at 7:52 pm

Today, we are excited to announce that the DBRX model, an open, general-purpose large language model (LLM) developed by Databricks, is available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. The DBRX LLM employs a fine-grained mixture-of-experts (MoE) architecture, pre-trained on 12 trillion tokens of carefully curated data and

- Knowledge Bases in Amazon Bedrock now simplifies asking questions on a single document by Suman Debnath (AWS Machine Learning Blog) on April 26, 2024 at 7:12 pm

At AWS re:Invent 2023, we announced the general availability of Knowledge Bases for Amazon Bedrock. With Knowledge Bases for Amazon Bedrock, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for fully managed Retrieval Augmented Generation (RAG). In previous posts, we covered new capabilities like hybrid search support, metadata filtering

- Deploy a Hugging Face (PyAnnote) speaker diarization model on Amazon SageMaker as an asynchronous endpoint by Sanjay Tiwary (AWS Machine Learning Blog) on April 25, 2024 at 5:03 pm

Speaker diarization, an essential process in audio analysis, segments an audio file based on speaker identity. This post delves into integrating Hugging Face’s PyAnnote for speaker diarization with Amazon SageMaker asynchronous endpoints. We provide a comprehensive guide on how to deploy speaker segmentation and clustering solutions using SageMaker on the AWS Cloud.

- Evaluate the text summarization capabilities of LLMs for enhanced decision-making on AWS by Dinesh Subramani (AWS Machine Learning Blog) on April 25, 2024 at 4:25 pm

Organizations across industries are using automatic text summarization to more efficiently handle vast amounts of information and make better decisions. In the financial sector, investment banks condense earnings reports down to key takeaways to rapidly analyze quarterly performance. Media companies use summarization to monitor news and social media so journalists can quickly write stories on

- Enhance conversational AI with advanced routing techniques with Amazon Bedrock by Ameer Hakme (AWS Machine Learning Blog) on April 24, 2024 at 4:30 pm

Conversational artificial intelligence (AI) assistants are engineered to provide precise, real-time responses through intelligent routing of queries to the most suitable AI functions. With AWS generative AI services like Amazon Bedrock, developers can create systems that expertly manage and respond to user requests. Amazon Bedrock is a fully managed service that offers a choice of

- [D] Simple Questions Thread by /u/AutoModerator (Machine Learning) on April 21, 2024 at 3:00 pm

Please post your questions here instead of creating a new thread. Encourage others who create new posts for questions to post here instead! Thread will stay alive until next one so keep posting after the date in the title. Thanks to everyone for answering questions in the previous thread!
