
Azure AI Fundamentals AI-900 Exam Preparation: the AI-900 exam is an opportunity to demonstrate knowledge of common machine learning (ML) and AI workloads and how to implement them on Azure. The exam is intended for candidates with both technical and non-technical backgrounds. Data science and software engineering experience is not required; however, some general programming knowledge or experience would be beneficial.

Azure AI Fundamentals can be used to prepare for other Azure role-based certifications, such as Azure Data Scientist Associate or Azure AI Engineer Associate, but it is not a prerequisite for any of them.

This Azure AI Fundamentals AI-900 Exam Preparation App provides basic and advanced machine learning quizzes and practice exams on Azure, Azure machine learning job interview questions and answers, and machine learning cheat sheets.

Download Azure AI 900 on Windows 10/11

Azure AI Fundamentals AI-900 Exam Preparation App Features:

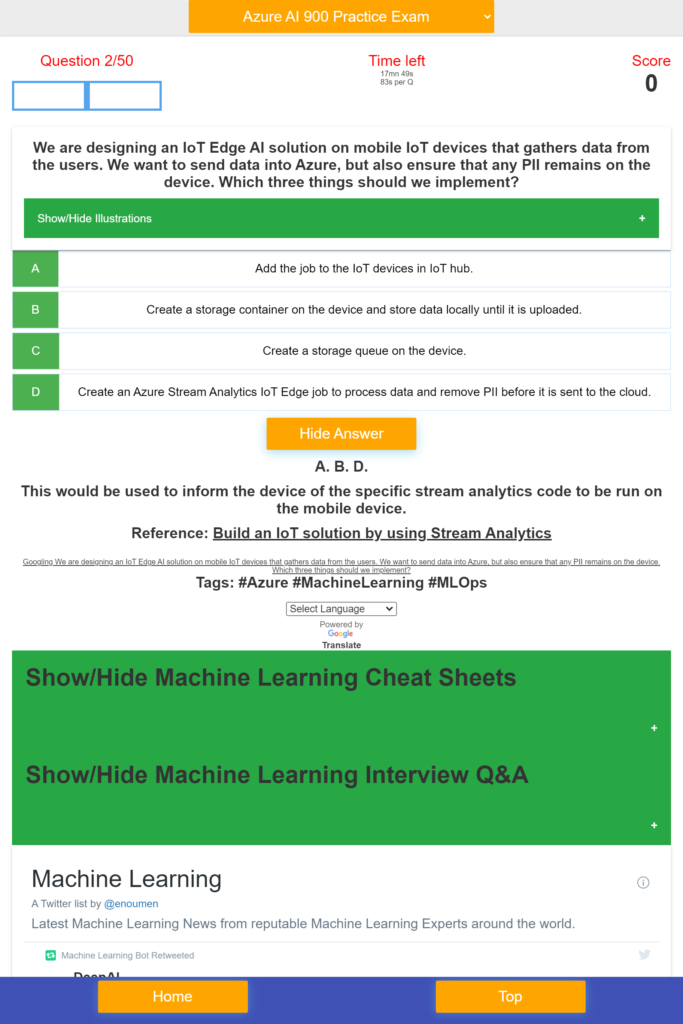

– Azure AI-900 Questions with Detailed Answers and References

– Machine Learning Basics Questions and Answers

– Machine Learning Advanced Questions and Answers

– NLP and Computer Vision Questions and Answers

– Scorecard

– Countdown timer

– Machine Learning Cheat Sheets

– Machine Learning Interview Questions and Answers

– Machine Learning Latest News

This Azure AI Fundamentals AI-900 Exam Prep App covers:

- ML implementation and operations

- Describe Artificial Intelligence workloads and considerations

- Describe fundamental principles of machine learning on Azure

- Describe features of computer vision workloads on Azure

- Describe features of Natural Language Processing (NLP) workloads on Azure

- Describe features of conversational AI workloads on Azure

- QnA Maker service, Language Understanding service (LUIS), Speech service, Translator Text service, Form Recognizer service, Face service, Custom Vision service, Computer Vision service, facial detection, facial recognition and facial analysis solutions, optical character recognition solutions, object detection solutions, image classification solutions, Azure Machine Learning designer, automated ML UI, conversational AI workloads, anomaly detection workloads, forecasting workloads, Kafka, SQL, NoSQL, Python, linear regression, logistic regression, sampling, datasets, statistical interaction, selection bias, non-Gaussian distributions, bias-variance trade-off, normal distribution, correlation and covariance, point estimates and confidence intervals, A/B testing, p-values, statistical power (sensitivity), overfitting and underfitting, regularization, Law of Large Numbers, confounding variables, survivorship bias, univariate, bivariate and multivariate analysis, resampling, ROC curves, TF-IDF vectorization, cluster sampling, and more.

This app can help you:

- Identify features of common AI workloads

- Identify prediction/forecasting workloads

- Identify features of anomaly detection workloads

- Identify computer vision workloads

- Identify natural language processing or knowledge mining workloads

- Identify conversational AI workloads

- Identify guiding principles for responsible AI

- Describe considerations for fairness in an AI solution

- Describe considerations for reliability and safety in an AI solution

- Describe considerations for privacy and security in an AI solution

- Describe considerations for inclusiveness in an AI solution

- Describe considerations for transparency in an AI solution

- Describe considerations for accountability in an AI solution

- Identify common types of computer vision solutions

- Identify Azure tools and services for computer vision tasks

- Identify features and uses for key phrase extraction

- Identify features and uses for entity recognition

- Identify features and uses for sentiment analysis

- Identify features and uses for language modeling

- Identify features and uses for speech recognition and synthesis

- Identify features and uses for translation

- Identify capabilities of the Text Analytics service

- Identify capabilities of the Language Understanding service (LUIS)

- And more
